Angular development is great. It offers a clean way to break problems into small, easily managed parts, and the Angular CLI puts even more power at our fingertips. Narwhal Technologies Inc (Nrwl) has added to that power by providing extensions to the Angular CLI. In this article, I will describe how to use the Nrwl Extensions to create and maintain flexible Angular apps.

To learn how to install Nrwl, visit their getting started guide: https://nrwl.io/nx/guide-getting-started

Make sure to install the Angular CLI and the Nrwl schematics globally using npm. Here is a list of the versions I used for this article:

node: 8.15.0

npm: 5.0.0

"@angular/cli": "~7.1.0"

"@nrwl/schematics": "7.4.0"

Creating an Nx Workspace

The Nx Workspace is a collection of Angular applications and libraries. When creating the workspace there will be a number of options available during generation. To start, run the following command:

create-nx-workspace <workspace-name>

This will begin the process of generating the workspace with the provided name.

After a few initial packages are installed, a prompt will display to choose the stylesheet format:

Use the arrow keys to choose between CSS, SCSS, SASS, LESS, and Stylus. After the desired format is highlighted, press Enter.

The next prompt asks for the NPM scope. The scope lets applications reference workspace libraries by a scoped package name. For example, given a library called ‘my-lib’ and an npm scope of ‘my’, an application can import the library with the following statement:

import { MyLibModule } from '@my/my-lib';

To learn more about npm scopes check out their documentation: https://docs.npmjs.com/about-scopes
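The scoped name is resolved inside the workspace rather than downloaded from a registry; in an Nx workspace this is typically done with TypeScript path mapping in the root tsconfig.json. The sketch below mimics that lookup in plain TypeScript to show the idea. The `resolveScopedImport` helper and the `libs/<name>/src/index.ts` layout are assumptions made for illustration, not part of the Nx API.

```typescript
// Hypothetical helper: mimics how a scoped import could map to a workspace path.
// The libs/<name>/src/index.ts layout is an assumption for illustration.
function resolveScopedImport(specifier: string, npmScope: string): string | null {
  const prefix = '@' + npmScope + '/';
  if (specifier.indexOf(prefix) !== 0) {
    // Not a workspace library; normal node_modules resolution would apply.
    return null;
  }
  const libName = specifier.slice(prefix.length);
  if (libName.length === 0) {
    return null;
  }
  return 'libs/' + libName + '/src/index.ts';
}

console.log(resolveScopedImport('@my/my-lib', 'my'));    // libs/my-lib/src/index.ts
console.log(resolveScopedImport('@angular/core', 'my')); // null
```

The practical upshot is that choosing the scope up front matters: every import of a workspace library will carry it.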

After specifying an NPM scope, press Enter. A third prompt will appear to specify which package manager to use:

Use the arrow keys to choose between npm and Yarn. After the desired package manager is highlighted, press Enter.

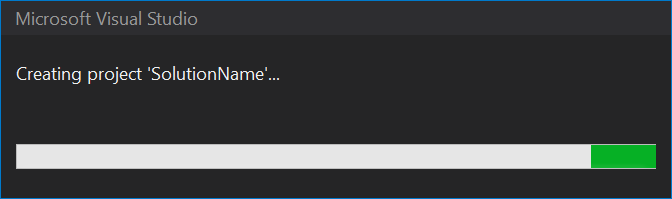

Now that the generation process has everything it needs, it will continue to create the folder structure, files, and configuration:

Project Structure

The generated workspace contains two important folders:

| Folder | Description |
| --- | --- |
| /apps | Contains the applications in the workspace. |
| /libs | Contains the libraries in the workspace. |

Adding Applications

Before adding an application with the CLI, be sure to navigate into the workspace folder. In this example, the folder is ‘my-platform-workspace’. Then use the Angular CLI to generate the app:

PS C:\NoRepo\NxWorkspace> cd my-platform-workspace

PS C:\NoRepo\NxWorkspace\my-platform-workspace> ng g app <app name>

Tip

When using Visual Studio Code, open the Nx Workspace folder. The built-in terminal will then open in the workspace directory by default.

After adding the application, a number of prompts will display and the app generation will proceed:

Running the application can be done using the Angular CLI as usual:

PS C:\NoRepo\NxWorkspace\my-platform-workspace> ng serve my-first-app

When the app is done building, go to http://localhost:4200 from a browser and see the default view:

Adding a Library

Adding a library is as easy as adding an application. Use the following command:

ng g lib <library name>

Generally, a module should be created for each library so it can be easily imported by applications. Once the library is created, components can be added to it. Make sure to export any library components or other Angular objects (providers, pipes, etc.) that need to be used by applications.

The Dependency Graph

Looking at package.json, a number of scripts have been added. A particularly useful one generates and displays a dependency graph of all the applications and libraries in the workspace. Generate the graph with the following command:

npm run dep-graph

For example, I’ve added my-lib and my-lib2 to my-first-app. This is the resulting dependency graph:

Here we can see that the my-first-app-e2e (end-to-end) test application depends on the my-first-app application, which in turn depends on the libraries my-lib and my-lib2. This is a very simple example. The graph becomes more valuable as more applications share more libraries.

It is also possible to get the JSON version of the dependency graph which can be used in various creative ways to help automate your workflow. This is all thanks to Nrwl Extensions and the power of Nx Workspaces.
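As one illustration of what such automation could look like, the sketch below takes a dependency map and lists every project affected by a change to a given library. The `DepGraph` shape (project name mapped to the names it depends on) and the `dependentsOf` helper are hypothetical; the JSON that Nx actually emits may be structured differently and would need adapting first.

```typescript
// Hypothetical shape: each project name maps to the projects it depends on.
// The real JSON produced by Nx may be structured differently.
type DepGraph = { [project: string]: string[] };

// Mirrors the example workspace described above.
const graph: DepGraph = {
  'my-first-app-e2e': ['my-first-app'],
  'my-first-app': ['my-lib', 'my-lib2'],
  'my-lib': [],
  'my-lib2': [],
};

// Returns every project that depends, directly or transitively, on `changed`.
function dependentsOf(deps: DepGraph, changed: string): string[] {
  const found: { [project: string]: boolean } = {};
  const queue: string[] = [changed];
  while (queue.length > 0) {
    const current = queue.shift() as string;
    for (const project of Object.keys(deps)) {
      if (deps[project].indexOf(current) !== -1 && !found[project]) {
        found[project] = true;
        queue.push(project); // its own dependents are affected too
      }
    }
  }
  return Object.keys(found).sort();
}

console.log(dependentsOf(graph, 'my-lib')); // [ 'my-first-app', 'my-first-app-e2e' ]
```

A list like this could, for instance, decide which applications need to be rebuilt or retested after a library changes.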